Tags

#deeplearning #fastai #has-images #initialization #kaiming #lesson_8

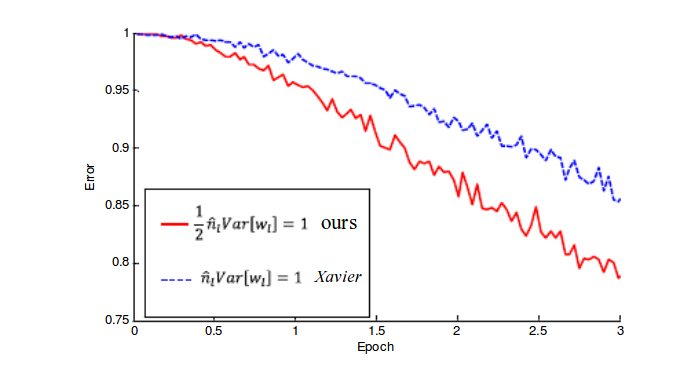

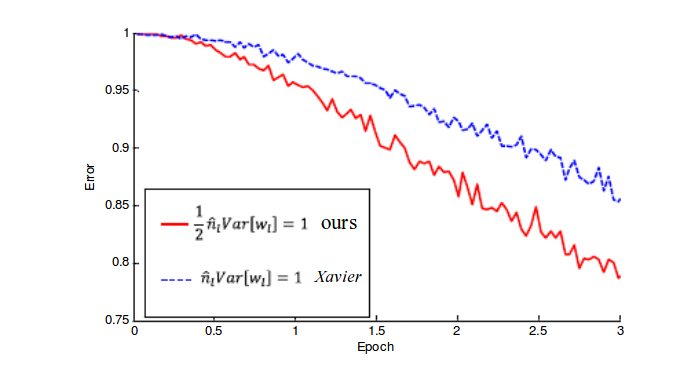

The convergence of a 22-layer large model. We use ReLU as the activation for both cases. Both our initialization (red) and “Xavier” (blue) [7] lead to convergence, but ours starts reducing error earlier.
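The gap between the two curves is usually explained by how each scheme scales the signal passing through ReLU layers. The sketch below is an illustration only, not the experiment behind the figure: it pushes random inputs through 22 randomly initialized fully connected ReLU layers and measures the spread of the final activations. The function name `forward_std`, the width of 512, and the batch size are assumptions chosen for this example, and it looks only at the forward pass at initialization, not at training error.

```python
import numpy as np

def forward_std(init, depth=22, width=512, batch=1024, seed=0):
    """Std of the activations after `depth` random linear + ReLU layers."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((batch, width))
    for _ in range(depth):
        fan_in, fan_out = width, width
        if init == "kaiming":
            # Kaiming (He) normal: Var(w) = 2 / fan_in
            std = np.sqrt(2.0 / fan_in)
        elif init == "xavier":
            # Xavier (Glorot) normal: Var(w) = 2 / (fan_in + fan_out)
            std = np.sqrt(2.0 / (fan_in + fan_out))
        else:
            raise ValueError(f"unknown init: {init}")
        w = rng.standard_normal((fan_in, fan_out)) * std
        x = np.maximum(x @ w, 0.0)  # linear layer followed by ReLU
    return float(x.std())

kaiming_std = forward_std("kaiming")
xavier_std = forward_std("xavier")
print(f"kaiming: {kaiming_std:.3e}  xavier: {xavier_std:.3e}")
```

With equal fan-in and fan-out, the Xavier weights here are smaller than the Kaiming weights by a factor of √2 per layer, so the Xavier activations end up far smaller after 22 layers while the Kaiming activations stay at roughly the input scale. A much weaker signal at the start of training is consistent with the caption's observation that the Xavier run starts reducing error later.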
