Edited, memorised or added to reading queue

on 23-Jun-2022 (Thu)

Do you want BuboFlash to help you learning these things? Click here to log in or create user.

Flashcard 7093166148876

| status | not learned | measured difficulty | 37% [default] | last interval [days] | |||

|---|---|---|---|---|---|---|---|

| repetition number in this series | 0 | memorised on | scheduled repetition | ||||

| scheduled repetition interval | last repetition or drill |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

Flashcard 7096368762124

| status | not learned | measured difficulty | 37% [default] | last interval [days] | |||

|---|---|---|---|---|---|---|---|

| repetition number in this series | 0 | memorised on | scheduled repetition | ||||

| scheduled repetition interval | last repetition or drill |

Parent (intermediate) annotation

Open itDisadvantags of survey-based CX measurement 2. Reactive: Surveys are a backward-looking tool in a world where customers expect their concerns to be resolved increasingly quickly.

Original toplevel document (pdf)

cannot see any pdfsFlashcard 7096370597132

| status | not learned | measured difficulty | 37% [default] | last interval [days] | |||

|---|---|---|---|---|---|---|---|

| repetition number in this series | 0 | memorised on | scheduled repetition | ||||

| scheduled repetition interval | last repetition or drill |

Parent (intermediate) annotation

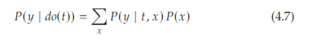

Open itWe assumed 𝑋 is discrete when we summed over its values, but we can simply replace the sum with an integral if 𝑋 is continuous. Throughout this book, that will be the case, so we usually won’t point it out To jest kluczowe równanie.

Original toplevel document (pdf)

cannot see any pdfsFlashcard 7096372432140

| status | not learned | measured difficulty | 37% [default] | last interval [days] | |||

|---|---|---|---|---|---|---|---|

| repetition number in this series | 0 | memorised on | scheduled repetition | ||||

| scheduled repetition interval | last repetition or drill |

Parent (intermediate) annotation

Open itBuilding on the structural approach to causality introduced by Haavelmo (1943) and the graph-theoretic framework proposed by Pearl (1995), the artificial intelligence (AI) literature has developed a wide array of techniques for causal learning that allow le

Original toplevel document (pdf)

cannot see any pdfs| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

Parent (intermediate) annotation

Open itFor example, suppose that the causal DAG includes an unmeasured common cause of A and Y, U and also a measured variable L that is an effect of U. In those cases, it is generally better to adjust for L, because even though adjusting for L will not eliminate all confounding by U, it will typically eliminate some of the confounding

Original toplevel document (pdf)

cannot see any pdfsFlashcard 7096376102156

| status | not learned | measured difficulty | 37% [default] | last interval [days] | |||

|---|---|---|---|---|---|---|---|

| repetition number in this series | 0 | memorised on | scheduled repetition | ||||

| scheduled repetition interval | last repetition or drill |

Parent (intermediate) annotation

Open itmore covariates could lead to a higher chance of satisfying unconfoundedness, it can lead to a higher chance of violating positivity. As we increase the dimension of the covariates, we make the <span>subgroups for any level 𝑥 of the covariates smaller. <span>

Original toplevel document (pdf)

cannot see any pdfsFlashcard 7096377937164

| status | not learned | measured difficulty | 37% [default] | last interval [days] | |||

|---|---|---|---|---|---|---|---|

| repetition number in this series | 0 | memorised on | scheduled repetition | ||||

| scheduled repetition interval | last repetition or drill |

Flashcard 7096379772172

| status | not learned | measured difficulty | 37% [default] | last interval [days] | |||

|---|---|---|---|---|---|---|---|

| repetition number in this series | 0 | memorised on | scheduled repetition | ||||

| scheduled repetition interval | last repetition or drill |

Parent (intermediate) annotation

Open itnot only is association not causation, but causation is a sub-category of association. That’s why association and causation both flow along directed paths.

Original toplevel document (pdf)

cannot see any pdfsFlashcard 7096381607180

| status | not learned | measured difficulty | 37% [default] | last interval [days] | |||

|---|---|---|---|---|---|---|---|

| repetition number in this series | 0 | memorised on | scheduled repetition | ||||

| scheduled repetition interval | last repetition or drill |

Flashcard 7096384490764

| status | not learned | measured difficulty | 37% [default] | last interval [days] | |||

|---|---|---|---|---|---|---|---|

| repetition number in this series | 0 | memorised on | scheduled repetition | ||||

| scheduled repetition interval | last repetition or drill |

Parent (intermediate) annotation

Open itn the confounders that block the backdoor path. If those data are available, then the choice of one of these methods over the others is often a matter of personal taste. Unless the treatment is <span>time-varying -- then we have to go to G-methods <span>

Original toplevel document (pdf)

cannot see any pdfs| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

Parent (intermediate) annotation

Open itThe causal graph for interventional distributions is simply the same graph that was used for the observational joint distribution, but with all of the edges to the intervened node(s) removed. This is because the probability for the intervened factor has been set to 1, so we can just ignore that factor (this is the focus of the next section). Another way to see that the intervened node has no causal parents is that the intervened node is set to a constant value, so it no longer depend

Original toplevel document (pdf)

cannot see any pdfsFlashcard 7096388422924

| status | not learned | measured difficulty | 37% [default] | last interval [days] | |||

|---|---|---|---|---|---|---|---|

| repetition number in this series | 0 | memorised on | scheduled repetition | ||||

| scheduled repetition interval | last repetition or drill |

Parent (intermediate) annotation

Open itOf course, this assumption could be violated. For example, if the treatment is “get a dog” and the outcome is my happiness, it could easily be that my happiness is influenced by whether or not <span>my friends get dogs because we could end up hanging out more to have our dogs play together <span>

Original toplevel document (pdf)

cannot see any pdfs| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |