Edited, memorised or added to reading queue

on 05-Jun-2024 (Wed)


| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |


Flashcard 7629495209228

| status | not learned | measured difficulty | 37% [default] | last interval [days] | |||

|---|---|---|---|---|---|---|---|

| repetition number in this series | 0 | memorised on | scheduled repetition | ||||

| scheduled repetition interval | last repetition or drill |

    # Import the sigmoid function from scipy
    from scipy.special import expit as sigmoid

    # Weight from the model
    weight = 0.14

    # Print the approximate win probability predicted close game
    print(sigmoid(1 * 0.14))

    # Print the approximate win probability predicted blowout gam
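The snippet above is cut off before the blowout case. As a minimal sketch of the same idea, the block below applies a logistic sigmoid to `weight * point_difference`. It uses a hand-written `sigmoid` built on `math.exp` so that it runs without SciPy (`scipy.special.expit` computes the same function). The 10-point margin used for the "blowout" is an assumption made for illustration; the original does not state a value.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, the same function as scipy.special.expit."""
    return 1.0 / (1.0 + math.exp(-x))


# Weight from the model (value taken from the snippet above)
weight = 0.14

# Close game: a 1-point difference, as in the snippet above
close_game_prob = sigmoid(1 * weight)

# Blowout: a 10-point difference (assumed value, not from the source)
blowout_prob = sigmoid(10 * weight)

print(round(close_game_prob, 2))
print(round(blowout_prob, 2))
```

With this weight, a one-point margin barely moves the predicted probability away from 0.5, while a larger margin pushes it much closer to 1.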


Parent (intermediate) annotation

As the number of hyperparameters and their ranges grow, the search space grows exponentially, and tuning the models manually or by grid search becomes impractical. Bayesian optimization for hyperparameter tuning proposes hyperparameters (step 1) iteratively based on previous performance (Shahriari, Swersky, Wang, Adams, & De Freitas, 2015). We use Bayesian optimization to search the hyperparameter space for our model extensively.
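To make the exponential growth concrete, here is a small sketch that counts how many configurations an exhaustive grid search would have to evaluate. The hyperparameter names and candidate values are hypothetical, chosen only for illustration; they are not taken from the source.

```python
from itertools import product

# Hypothetical search space: names and values are illustrative assumptions.
search_space = {
    "learning_rate": [0.001, 0.01, 0.1],
    "num_layers": [1, 2, 3],
    "dropout": [0.0, 0.25, 0.5],
}


def grid_size(space: dict) -> int:
    """Number of configurations in a full grid over the given space."""
    size = 1
    for values in space.values():
        size *= len(values)
    return size


# Enumerate the full grid explicitly to confirm the count.
grid = list(product(*search_space.values()))
print(len(grid), grid_size(search_space))

# With v candidate values per hyperparameter, k hyperparameters give v**k runs.
for k in range(1, 7):
    print(k, 3 ** k)
```

Each additional hyperparameter multiplies the number of runs, which is why a method that picks the next configuration based on earlier results can be attractive.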

Flashcard 7629499403532


Parent (intermediate) annotation

The Long Short-Term Memory, or LSTM, network is a type of Recurrent Neural Network.

Flashcard 7629500976396


Parent (intermediate) annotation

For example, suppose that the causal DAG includes an unmeasured common cause U of A and Y, and also a measured variable L that is an effect of U.
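The structure described here can be written down as a small adjacency map. This is only a sketch of the stated arrows (U → A, U → Y, U → L); whether the full DAG also contains an arrow from A to Y is not stated in this excerpt, so it is left out. The helper names are hypothetical.

```python
# Directed edges of the DAG described above: parent -> list of children.
# Only the arrows stated in the text are included (an assumption of this sketch).
edges = {
    "U": ["A", "Y", "L"],
    "A": [],
    "Y": [],
    "L": [],
}

# U is unmeasured; A, Y and L are measured.
measured = {"A", "Y", "L"}


def parents(node: str) -> list:
    """Return the direct causes (parents) of a node."""
    return sorted(p for p, children in edges.items() if node in children)


def common_causes(x: str, y: str) -> list:
    """Return the nodes that are direct causes of both x and y."""
    return sorted(set(parents(x)) & set(parents(y)))


unmeasured_common = [n for n in common_causes("A", "Y") if n not in measured]
print(unmeasured_common)  # the unmeasured common cause of A and Y
print(parents("L"))       # L is an effect of U
```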


Parent (intermediate) annotation

[…] just enough software development skills to be able to write modular code and implement unit tests. They don’t need to know about the intricacies of non-blocking asynchronous messaging brokering. They need just enough data engineering skills to build (and schedule the ETL for) feature datasets for their models, but not to construct a petabyte-scale streaming ingestion framework. They need just enough visualization skills to create plots and charts that communicate clearly what their research and models are doing, but not to develop dynamic web apps that have co

Flashcard 7629509889292


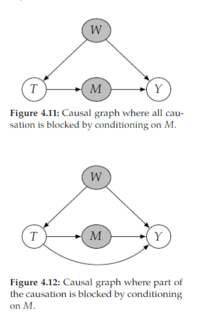

Parent (intermediate) annotation

There are two categories of things that could go wrong if we condition on descendants of 𝑇:

1. We block the flow of causation from 𝑇 to 𝑌.
2. We induce non-causal association between 𝑇 and 𝑌.
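The first failure mode can be illustrated with a small simulation. This is a sketch under assumed numbers, not a result from the source: 𝑇 affects 𝑌 only through a binary mediator `M` (a descendant of 𝑇), and all probabilities and effect sizes below are invented for illustration. Comparing treated and untreated units overall recovers the effect, while comparing them within a fixed value of `M` makes it vanish.

```python
import random
from statistics import mean


def simulate(n: int = 20000, seed: int = 0) -> list:
    """Simulate the chain T -> M -> Y with illustrative (assumed) parameters."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        t = rng.random() < 0.5                    # treatment assigned at random
        m = rng.random() < (0.9 if t else 0.1)    # mediator depends on T
        y = 2.0 * m + rng.gauss(0.0, 1.0)         # outcome depends on M only
        rows.append((int(t), int(m), y))
    return rows


def effect(rows: list) -> float:
    """Difference in mean outcome between treated and untreated rows."""
    treated = [y for t, _, y in rows if t == 1]
    control = [y for t, _, y in rows if t == 0]
    return mean(treated) - mean(control)


rows = simulate()
unconditional = effect(rows)                        # close to 2 * (0.9 - 0.1) = 1.6
within_m1 = effect([r for r in rows if r[1] == 1])  # close to 0
within_m0 = effect([r for r in rows if r[1] == 0])  # close to 0

print(round(unconditional, 2), round(within_m1, 2), round(within_m0, 2))
```

The sketch covers only the blocking case; the second failure mode (inducing non-causal association) would need a different structure, such as a descendant that is also affected by another cause of 𝑌.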