Edited, memorised or added to reading queue

on 25-Oct-2024 (Fri)



Flashcard 7629871910156

| status | not learned | measured difficulty | 37% [default] | last interval [days] | |
|---|---|---|---|---|---|
| repetition number in this series | 0 | memorised on | | scheduled repetition | |
| scheduled repetition interval | | last repetition or drill | | | |

Parent (intermediate) annotation

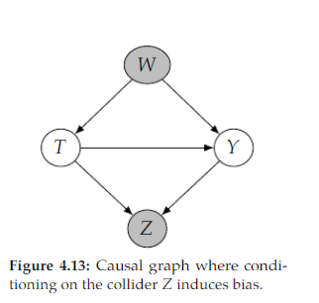

Consider the following general kind of path, where → · · · → denotes a directed path: 𝑇 → · · · → 𝑍 ← · · · ← 𝑌. Conditioning on 𝑍, or on any descendant of 𝑍, in a path like this will induce collider bias.
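The claim can be checked with a small simulation. This is a minimal sketch, not taken from the source document: it assumes the simplest instance of the path (𝑇 → 𝑍 ← 𝑌 with direct edges), standard normal 𝑇 and 𝑌, and an arbitrary selection threshold `z > 1.0` standing in for "conditioning on 𝑍". All variable names and constants are illustrative.

```python
import random

def pearson(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

rng = random.Random(0)  # fixed seed so the run is repeatable
n = 20000

# T and Y are generated independently: neither causes the other.
T = [rng.gauss(0, 1) for _ in range(n)]
Y = [rng.gauss(0, 1) for _ in range(n)]
# Z is a collider: both T and Y point into it (T -> Z <- Y).
Z = [t + y + 0.5 * rng.gauss(0, 1) for t, y in zip(T, Y)]

# Without conditioning, T and Y look unrelated.
r_all = pearson(T, Y)

# "Conditioning" on Z here means keeping only rows where Z is large.
selected = [(t, y) for t, y, z in zip(T, Y, Z) if z > 1.0]
r_cond = pearson([t for t, _ in selected], [y for _, y in selected])

print(f"corr(T, Y) over all rows:      {r_all:+.3f}")
print(f"corr(T, Y) among rows, Z > 1:  {r_cond:+.3f}")
```

In the selected subset, a large 𝑇 makes a large 𝑌 less necessary to reach the threshold, so the two appear negatively associated even though they were generated independently.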


Parent (intermediate) annotation

…advancements in design patterns for structured data evolved with the introduction of the wide-and-deep model pattern, outlined in “Wide & Deep Learning for Recommender Systems” (https://arxiv.org/abs/1606.07792) by Heng-Tze Cheng et al. at the technology-agnostic research group Google Research, …


TfC_01_FINAL_EXAMPLE.ipynb

…the right shape

Scale features (normalize or standardize). Neural networks tend to prefer normalization.

Normalization: adjusting values measured on different scales to a notionally common scale.

Normalization

    # Start from scratch
    import pandas as pd
    import matplotlib.pyplot as plt
    import tensorflow as tf

    ## Borrow a few classes from scikit-learn
    from sklearn.compose import make_column_transformer
    from sklearn.preprocessing import MinMaxScaler, OneHotEncoder
    from sklearn.model_selection import train_test_split

    # Create column transformer
    ct = make_column_transformer(
        (MinMaxScaler(), ['age', 'bmi', 'children']),  # scale all values in these columns to between 0 and 1
        (OneHotEncoder(handle_unknown='ignore', dtype="int32"), ['sex', 'smoker', 'region']))

    # Create X and y
    # (`insurance` is the DataFrame loaded earlier in the notebook; it is not defined in this excerpt)
    X = insurance.drop('charges', axis=1)
    y = insurance['charges']

    # Split datasets
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

    # Fit the column transformer on training data only, then apply it to both datasets (train and test)
    ct.fit(X_train)

    # Transform data
    X_train_normalize = ct.transform(X_train)
    X_test_normalize = ct.transform(X_test)
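To make the "fit on training data, apply to both" step concrete, here is a minimal pure-Python sketch of min-max scaling for a single column. It is an illustration of the idea rather than scikit-learn's implementation; the helper names `fit_min_max` and `transform_min_max` and the age values are made up for this example.

```python
def fit_min_max(train_values):
    """Learn the scaling range from the training column only."""
    return min(train_values), max(train_values)

def transform_min_max(values, lo, hi):
    """Map values with (v - lo) / (hi - lo), using the range learned in fit."""
    span = hi - lo
    if span == 0:
        # Constant training column: nothing to scale, map everything to 0.
        return [0.0 for _ in values]
    return [(v - lo) / span for v in values]

# Made-up ages, purely for illustration.
train_age = [19, 25, 40, 64]
test_age = [18, 33, 70]

lo, hi = fit_min_max(train_age)                       # fit on train only
train_scaled = transform_min_max(train_age, lo, hi)   # always within [0, 1]
test_scaled = transform_min_max(test_age, lo, hi)     # may fall outside [0, 1]

print(train_scaled)
print(test_scaled)
```

Because the range comes from the training split alone, test values below the training minimum or above the training maximum land outside [0, 1]. That is expected: fitting on the test split as well would leak information about it into preprocessing.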

Flashcard 7662797720844


Tensor indexing

    # Tensors can be indexed just like Python lists.
    # Get the first 2 elements of each dimension
    A[:2, :2, :2, :2]
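The flashcard does not show how `A` was created. The sketch below uses a NumPy array as a stand-in for a rank-4 tensor, on the assumption that the same basic slice syntax carries over to `tf.Tensor`; the shape `(2, 3, 4, 5)` is an arbitrary choice for illustration.

```python
import numpy as np

# A rank-4 array with shape (2, 3, 4, 5), filled with 0..119.
A = np.arange(2 * 3 * 4 * 5).reshape(2, 3, 4, 5)

# One slice per dimension: keep at most the first 2 entries along each axis.
first_two = A[:2, :2, :2, :2]

print(A.shape)          # (2, 3, 4, 5)
print(first_two.shape)  # (2, 2, 2, 2)
```

Unlike a nested Python list, the array accepts all four slices in a single pair of brackets, separated by commas.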

Flashcard 7662799555852


Parent (intermediate) annotation

…older than a certain date, for example, a maildir folder with 5 years' worth of email, and you want to delete everything older than 2 years, then run the following command.

    find . -type f -mtime +730 -maxdepth 1 -exec rm {} \;

Original toplevel document

How to delete all files before a certain date in Linux

Posted on: September 15, 2015

If you have a list of files, but you only want to delete files older than a certain date, for example, a maildir folder with 5 years' worth of email, and you want to delete everything older than 2 years, then run the following command.

    find . -type f -mtime +XXX -maxdepth 1 -exec rm {} \;

The syntax of this is as follows.

find – the command that finds the files

. – the dot signifies the current folder. You can change this to something like /home/someuser/mail/somedomain/
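For comparison, the same selection logic can be sketched in Python. This is not from the original post: the function names `find_old_files` and `delete_old_files` are hypothetical, and the age test assumes that `-mtime +N` means "age in whole days, fraction dropped, strictly greater than N". It defaults to a dry run so that nothing is removed by accident.

```python
import time
from pathlib import Path

SECONDS_PER_DAY = 86400

def find_old_files(folder, days, now=None):
    """Return regular files directly inside `folder` older than `days` whole days."""
    now = time.time() if now is None else now
    old = []
    for entry in Path(folder).iterdir():  # direct children only, like -maxdepth 1
        # Regular files only, like -type f (symlinks are skipped).
        if entry.is_symlink() or not entry.is_file():
            continue
        age_days = int((now - entry.stat().st_mtime) // SECONDS_PER_DAY)
        if age_days > days:  # assumed meaning of -mtime +days
            old.append(entry)
    return sorted(old)

def delete_old_files(folder, days, dry_run=True):
    """Delete (or, in a dry run, only report) the files found by find_old_files."""
    targets = find_old_files(folder, days)
    for path in targets:
        if dry_run:
            print(f"would delete {path}")
        else:
            path.unlink()
    return targets
```

Calling `delete_old_files(".", 730)` only prints the candidates; passing `dry_run=False` actually removes them. Reviewing the dry-run output first is the same precaution as running the `find` command without `-exec rm {} \;` before running it for real.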

Flashcard 7662801390860


Parent (intermediate) annotation

The LSTM expects input data to have the dimensions: samples, time steps, and features.
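As an illustration of that three-dimensional layout, the sketch below slices a univariate series into overlapping windows using nested Python lists. The helper names `make_lstm_windows` and `nested_shape` and the sample series are hypothetical. In practice the result would typically be converted to an array or tensor before being passed to an LSTM layer.

```python
def make_lstm_windows(series, time_steps):
    """Slice a 1-D series into windows laid out as [samples][time_steps][features]."""
    windows = []
    for start in range(len(series) - time_steps + 1):
        window = series[start:start + time_steps]
        # Wrap each value in a list: one feature per time step.
        windows.append([[value] for value in window])
    return windows

def nested_shape(data):
    """Report the shape of a regular nested list as a tuple."""
    shape = []
    while isinstance(data, list):
        shape.append(len(data))
        data = data[0]
    return tuple(shape)

series = [10, 20, 30, 40, 50, 60]           # made-up univariate series
X = make_lstm_windows(series, time_steps=3)

print(nested_shape(X))  # (4, 3, 1): 4 samples, 3 time steps, 1 feature
print(X[0])             # [[10], [20], [30]]
```

A series of 6 values with 3 time steps per window yields 6 - 3 + 1 = 4 samples. With several input variables, each innermost list would hold one value per variable instead of a single value.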