Edited, memorised or added to reading queue

on 31-Oct-2024 (Thu)


Flashcard 7629895503116

| status | not learned | measured difficulty | 37% [default] | last interval [days] | |||

|---|---|---|---|---|---|---|---|

| repetition number in this series | 0 | memorised on | scheduled repetition | ||||

| scheduled repetition interval | last repetition or drill |

Parent (intermediate) annotation

Whenever do(𝑡) appears after the conditioning bar, everything in that expression is in the post-intervention world where the intervention do(𝑡) occurs.
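As a small illustration of this convention, the two expressions below can be compared. The outcome Y and the covariate Z are symbols assumed here for the example; they do not appear in the annotation itself.

```latex
% Post-intervention: the subpopulation with Z = z is taken from the world
% in which the intervention do(t) has been applied to everyone.
\mathbb{E}[Y \mid do(t), Z = z]

% Pre-intervention (observational): the subpopulation with Z = z in the world
% where individuals receive whatever treatment they would normally take.
\mathbb{E}[Y \mid Z = z]
```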

Original toplevel document (pdf)

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

Parent (intermediate) annotation

1.4.1 LSTM Weights

A memory cell has weight parameters for the input and output, as well as an internal state that is built up through exposure to input time steps.

Input Weights. Used to weight input for the current time step.

Output Weights. Used to weight the output from the last time step.

Internal State. Internal state used in the calculation of the output for this time step.

Original toplevel document (pdf)

Flashcard 7649822379276


Parent (intermediate) annotation

If the numbers of input and output time steps differ, an Encoder-Decoder architecture can be used. The input time steps are mapped to a fixed-size internal representation of the sequence, and this vector is then used as input for producing each time step in the output sequence.
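The idea can be sketched in a few lines of plain Python. This is only a toy under stated assumptions: it uses a simple tanh recurrence instead of LSTM cells, the weights are random and untrained, and the names encode, decode, and HIDDEN are made up for the illustration. The point is that five input steps and three output steps meet through one fixed-size vector.

```python
import math
import random


def encode(inputs, w_in, w_state):
    """Fold a variable-length sequence of floats into one fixed-size state vector."""
    size = len(w_in)
    state = [0.0] * size
    for x in inputs:
        state = [
            math.tanh(w_in[i] * x + sum(w_state[i][j] * state[j] for j in range(size)))
            for i in range(size)
        ]
    return state


def decode(context, n_steps, w_out):
    """Produce n_steps outputs, feeding the same context vector in at every step."""
    outputs = []
    prev = 0.0  # previous output, fed back into the next step
    for _ in range(n_steps):
        prev = math.tanh(sum(w * c for w, c in zip(w_out, context)) + 0.5 * prev)
        outputs.append(prev)
    return outputs


random.seed(0)
HIDDEN = 4  # size of the fixed internal representation (assumed value)
w_in = [random.uniform(-1, 1) for _ in range(HIDDEN)]
w_state = [[random.uniform(-1, 1) for _ in range(HIDDEN)] for _ in range(HIDDEN)]
w_out = [random.uniform(-1, 1) for _ in range(HIDDEN)]

context = encode([0.1, 0.2, 0.3, 0.4, 0.5], w_in, w_state)  # 5 input time steps
outputs = decode(context, 3, w_out)                          # 3 output time steps
```

The length of context depends only on HIDDEN, never on the number of input steps, which is what allows the input and output lengths to differ.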

Original toplevel document (pdf)


Parent (intermediate) annotation

Data scientists and engineers are expected to build end-to-end solutions to business problems, of which models are a small but important part. Because this book focuses on end-to-end solutions, we say that the data scientist’s job is to build data science applications.

Original toplevel document (pdf)

Flashcard 7663768440076


Parent (intermediate) annotation

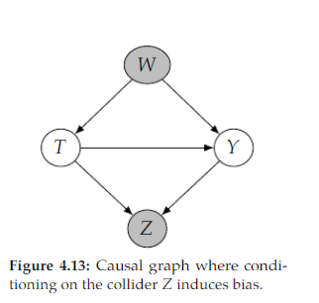

The causal effect estimate will be biased by the non-causal association that we induce when we condition on 𝑍 or any of its descendants.

Original toplevel document (pdf)

Flashcard 7663770275084


Parent (intermediate) annotation

Typical workflow: build a model -> fit it -> evaluate -> tweak -> fit -> evaluate -> ...
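The loop can be sketched without any framework. The following is a minimal illustration in plain Python rather than TensorFlow: a one-feature linear model fitted by gradient descent on made-up data (y = 2x + 1), where the "tweak" is simply the number of epochs. The function names build_model, fit, and evaluate are assumptions chosen to mirror the workflow, not an existing API.

```python
def build_model():
    """Build: a one-feature linear model y = w * x + b, stored as a dict."""
    return {"w": 0.0, "b": 0.0}


def fit(model, xs, ys, learning_rate, epochs):
    """Fit: plain gradient descent on mean squared error."""
    n = len(xs)
    for _ in range(epochs):
        errors = [model["w"] * x + model["b"] - y for x, y in zip(xs, ys)]
        grad_w = sum(2 * e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(2 * e for e in errors) / n
        model["w"] -= learning_rate * grad_w
        model["b"] -= learning_rate * grad_b
    return model


def evaluate(model, xs, ys):
    """Evaluate: mean absolute error on held-out data."""
    return sum(abs(model["w"] * x + model["b"] - y) for x, y in zip(xs, ys)) / len(xs)


# Made-up data following y = 2x + 1, split into training and test sets.
train_x = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
train_y = [2 * x + 1 for x in train_x]
test_x = [6.0, 7.0]
test_y = [2 * x + 1 for x in test_x]

# build -> fit -> evaluate -> tweak (more epochs) -> fit -> evaluate -> ...
history = []
for epochs in (10, 100, 1000):
    model = fit(build_model(), train_x, train_y, learning_rate=0.01, epochs=epochs)
    history.append((epochs, evaluate(model, test_x, test_y)))
```

Each entry in history pairs one tweak with its test error, so the effect of a change can be compared across rounds.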

Original toplevel document

TfC 01 regression

activation functions # 2. Compiling: change optimizer or its parameters (e.g. learning rate) # 3. Fitting: more epochs, more data ### How? # from smaller model to larger model

Evaluating models. Typical workflow: build a model -> fit it -> evaluate -> tweak -> fit -> evaluate -> ... Building a model: experiment. Evaluating a model: visualize. What can be visualized? The data, the model itself, the training of a model, the predictions. ## The 3 sets (or actually 2 sets: training and test

Flashcard 7663772110092


Parent (intermediate) annotation

The problem here is that by default scales::percent() multiplies its input value by 100. This can be controlled by the scale parameter.
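The annotation is about R's scales package. As a rough Python analogue of the same pitfall, the sketch below uses a hypothetical helper named percent_label; it mimics only the multiply-by-scale step and none of the rounding or formatting options of the R function.

```python
def percent_label(value, scale=100):
    """Format a number as a percentage string after multiplying it by `scale`.

    Hypothetical helper for illustration: the default scale of 100 assumes the
    input is a proportion (0.4), not an already-scaled percentage (40).
    """
    return f"{value * scale:.0f}%"


proportion = percent_label(0.4)                # "40%": input was a proportion
double_scaled = percent_label(40)              # "4000%": input was already a percentage
already_percent = percent_label(40, scale=1)   # "40%": scale=1 leaves the value as-is
```

Whether the data holds proportions or percentages decides which scale is appropriate; the formatting call itself cannot tell the two apart.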

Original toplevel document

Something is not right here! 4000%!? That seems a bit excessive. The problem here is that by default scales::percent() multiplies its input value by 100. This can be controlled by the scale parameter.

    scales::percent(100, scale = 1)
    ## [1] "100%"

However, scale_y_continuous() expects a function as input for its labels parameter, not the actual labels themselves. Thus, using percent() is not an option anymore. Fortu
