Edited, memorised or added to reading queue

on 23-Oct-2024 (Wed)


Flashcard 7629770984716

| status | not learned | measured difficulty | 37% [default] | last interval [days] | |
|---|---|---|---|---|---|
| repetition number in this series | 0 | memorised on | | scheduled repetition | |
| scheduled repetition interval | | last repetition or drill | | | |

Parent (intermediate) annotation

Consistency encompasses the assumption that is sometimes referred to as “no multiple versions of treatment.”
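In potential-outcomes notation, consistency is commonly written as below. This assumes Y(t) denotes the potential outcome under treatment value t. "No multiple versions of treatment" is what makes Y(t) a single, well-defined quantity for each t.

```latex
\forall t:\quad T = t \;\Longrightarrow\; Y = Y(t)
\qquad\text{equivalently}\qquad Y = Y(T)
```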

Flashcard 7629863783692


Parent (intermediate) annotation

The most important take-home message: we need expert knowledge to determine whether we should adjust for a variable. The statistical criteria are insufficient to characterize confounding and confounders.

Flashcard 7629874269452


Parent (intermediate) annotation

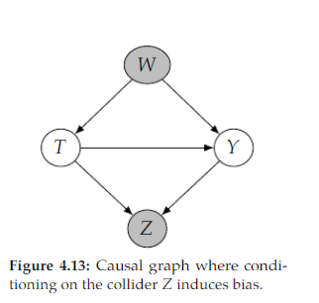

Two sources of bias:

- common cause (confounding)
- conditioning on a common effect (selection bias)
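A small simulation can make both bias sources concrete. This is a minimal pure-Python sketch over two made-up linear-Gaussian worlds. The coefficients, noise scales and the selection rule T + Y > 1 are hypothetical. In both worlds T has no causal effect on Y:

- **Confounding world.** The raw T–Y association is spurious, and adjusting for the common cause Z removes it.
- **Selection world.** T and Y are independent in the full population, but they become associated once we keep only the units selected on their common effect S.

Adjustment is done by correlating regression residuals, which serves as a linear stand-in for conditioning.

```python
import random


def corr(a, b):
    """Pearson correlation of two equal-length lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5


def residuals(v, z):
    """Residuals of v after a least-squares regression on z (with intercept)."""
    n = len(v)
    mv, mz = sum(v) / n, sum(z) / n
    slope = sum((zi - mz) * (vi - mv) for zi, vi in zip(z, v)) / sum((zi - mz) ** 2 for zi in z)
    return [vi - mv - slope * (zi - mz) for zi, vi in zip(z, v)]


rng = random.Random(42)
n = 20_000

# Confounding world: Z -> T and Z -> Y; T has no effect on Y.
z = [rng.gauss(0, 1) for _ in range(n)]
t1 = [zi + rng.gauss(0, 1) for zi in z]
y1 = [zi + rng.gauss(0, 1) for zi in z]
conf_raw = corr(t1, y1)                              # spurious association
conf_adj = corr(residuals(t1, z), residuals(y1, z))  # after adjusting for Z

# Selection world: T -> S <- Y with T and Y independent; keep only S = 1.
t2 = [rng.gauss(0, 1) for _ in range(n)]
y2 = [rng.gauss(0, 1) for _ in range(n)]
selected = [(a, b) for a, b in zip(t2, y2) if a + b > 1]  # S = 1 when T + Y > 1
sel_raw = corr(t2, y2)                                     # full population
sel_cond = corr([a for a, _ in selected], [b for _, b in selected])  # selected units only

print(f"confounding: raw {conf_raw:.2f}, adjusted for Z {conf_adj:.2f}")
print(f"selection:   full sample {sel_raw:.2f}, selected only {sel_cond:.2f}")
```

In this setup the raw confounded correlation should be about 0.5 and should drop to about 0 after adjustment. The selected-only correlation should be clearly negative even though the full-sample correlation is about 0.

In both worlds the third variable is associated with T and with Y. Only knowledge of the causal structure tells us whether conditioning on it removes bias or creates it.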

Flashcard 7629876890892


Parent (intermediate) annotation

Why use a survey to ask customers about their experiences when data about customer interactions can be used to predict both satisfaction and the likelihood that a customer will remain loyal, bolt, or even increase business?

Flashcard 7629879250188


Parent (intermediate) annotation

If we condition on a descendant of 𝑇 that isn’t a mediator, it could unblock a path from 𝑇 to 𝑌 that was blocked by a collider. For example, this is the case with conditioning on 𝑍 in Figure 4.13. This induces non-causal association between 𝑇 and 𝑌, which biases…
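A numerical sketch of this effect is below. It uses a hypothetical linear-Gaussian graph, not necessarily the graph of Figure 4.13:

- T → Y with a true effect of 2.
- Z = T + Y + noise, so Z is a descendant of T that is not a mediator, and a collider on T → Z ← Y.

Regressing Y on T alone recovers the effect. Adding Z as a regressor opens the collider path. For this particular setup, the population value of the adjusted coefficient works out to 0.5. All coefficients and noise scales are illustrative assumptions.

```python
import random


def ols_slope(y, x):
    """Slope of y on a single regressor x (with intercept)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def ols_two(y, x1, x2):
    """Coefficients (b1, b2) of y on x1 and x2 (with intercept), via the 2x2 normal equations."""
    n = len(y)
    m1, m2, my = sum(x1) / n, sum(x2) / n, sum(y) / n
    d1 = [a - m1 for a in x1]
    d2 = [a - m2 for a in x2]
    dy = [a - my for a in y]
    s11 = sum(a * a for a in d1)
    s22 = sum(a * a for a in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    s1y = sum(a * b for a, b in zip(d1, dy))
    s2y = sum(a * b for a, b in zip(d2, dy))
    det = s11 * s22 - s12 * s12
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det


rng = random.Random(0)
n = 100_000
t = [rng.gauss(0, 1) for _ in range(n)]
y = [2.0 * ti + rng.gauss(0, 1) for ti in t]             # true effect of T on Y is 2
z = [ti + yi + rng.gauss(0, 1) for ti, yi in zip(t, y)]  # collider on T -> Z <- Y

unadjusted = ols_slope(y, t)    # estimate without conditioning on Z
adjusted, _ = ols_two(y, t, z)  # estimate after conditioning on Z (biased)
print(f"coefficient on T without Z: {unadjusted:.2f}")
print(f"coefficient on T with Z:    {adjusted:.2f}")
```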

Flashcard 7662640958732


Parent (intermediate) annotation

Neural Turing Machines (Graves, Wayne, & Danihelka, 2014), Attention-Based RNN and its various implementations (e.g., Bahdanau, Cho, & Bengio, 2014; Luong, Pham, & Manning, 2015), or Transformers (Vaswani et al., 2017).


| status | not read | reprioritisations | |
|---|---|---|---|
| last reprioritisation on | | suggested re-reading day | |
| started reading on | | finished reading on | |

Parent (intermediate) annotation

…res for every time-step. Calculations at timesteps t and t − 1 would be highly redundant: features at t represent the complete history until t, not only what happened between t − 1 and t.

Generally speaking, explaining the predictions of vector-based methods is more difficult than often assumed. This holds even for linear models like logistic regression. Features are often preprocessed, for example to binarize counts (Sec. 2). Furthermore, they are typically strongly correlated, making it troublesome to interpret individual coefficients [6]. Table 3 shows exemplary feature weights in a logistic regression model used to predict order probabilities. If hundreds of features are used, and they are correlated and preprocessed, explaining the impact of consumer actions becomes a complex and confusing task.
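The difficulty with correlated features can be shown with a minimal pure-Python sketch. It rests on a deliberately extreme, hypothetical assumption: two perfectly correlated features, namely the same input duplicated.

The sketch fits logistic regression by plain, unregularized batch gradient descent from two different starting points. Both fits give the same predictions but very different individual coefficients, because the data only identify the sum w1 + w2.

The data generator `make_data`, its true coefficient 1.5, and the helper names are illustrative, not taken from the source.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def make_data(n, seed=0):
    """Hypothetical data: one signal x, label drawn with P(y=1) = sigmoid(1.5 * x)."""
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)
        xs.append(x)
        ys.append(1 if rng.random() < sigmoid(1.5 * x) else 0)
    return xs, ys


def fit_duplicated(xs, ys, w1, w2, b=0.0, lr=0.5, steps=1000):
    """Unregularized batch gradient descent on mean log-loss with features (x, x)."""
    n = len(xs)
    for _ in range(steps):
        g_w = g_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid((w1 + w2) * x + b) - y  # d(log-loss)/d(logit) for one sample
            g_w += err * x
            g_b += err
        # Both features equal x, so w1 and w2 receive exactly the same gradient:
        # their difference never changes, only their sum is learned.
        w1 -= lr * g_w / n
        w2 -= lr * g_w / n
        b -= lr * g_b / n
    return w1, w2, b


def predict(params, x):
    w1, w2, b = params
    return sigmoid(w1 * x + w2 * x + b)


xs, ys = make_data(500)
run_a = fit_duplicated(xs, ys, 0.0, 0.0)   # starts with equal weights
run_b = fit_duplicated(xs, ys, 3.0, -3.0)  # same starting sum (0), different split

max_gap = max(abs(predict(run_a, x) - predict(run_b, x)) for x in xs)
print("run A weights:", run_a[:2], "sum:", run_a[0] + run_a[1])
print("run B weights:", run_b[:2], "sum:", run_b[0] + run_b[1])
print("largest prediction difference:", max_gap)
```

Real features are rarely exact duplicates, but strong correlation produces a milder version of the same ambiguity. An L2 penalty would break the tie by splitting the weight equally between the copies. That split is still an artefact of the regularizer rather than evidence about either feature.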