Edited, memorised or added to reading queue

on 13-Apr-2022 (Wed)

Do you want BuboFlash to help you learning these things? Click here to log in or create user.

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

Flashcard 7070610492684

| status | not learned | measured difficulty | 37% [default] | last interval [days] | |||

|---|---|---|---|---|---|---|---|

| repetition number in this series | 0 | memorised on | scheduled repetition | ||||

| scheduled repetition interval | last repetition or drill |

Parent (intermediate) annotation

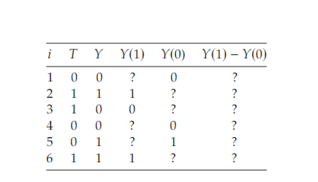

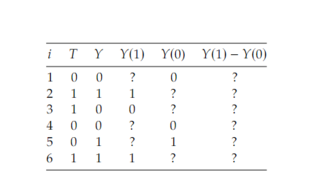

Open itThe Fundamental Problem of Causal Inference: It is impossible to observe all potential outcomes for a given individual

Original toplevel document (pdf)

cannot see any pdfsFlashcard 7070612065548

| status | not learned | measured difficulty | 37% [default] | last interval [days] | |||

|---|---|---|---|---|---|---|---|

| repetition number in this series | 0 | memorised on | scheduled repetition | ||||

| scheduled repetition interval | last repetition or drill |

Parent (intermediate) annotation

Open itThe Fundamental Problem of Causal Inference: It is impossible to observe all potential outcomes for a given individual

Original toplevel document (pdf)

cannot see any pdfsFlashcard 7070618094860

| status | not learned | measured difficulty | 37% [default] | last interval [days] | |||

|---|---|---|---|---|---|---|---|

| repetition number in this series | 0 | memorised on | scheduled repetition | ||||

| scheduled repetition interval | last repetition or drill |

Flashcard 7070629104908

| status | not learned | measured difficulty | 37% [default] | last interval [days] | |||

|---|---|---|---|---|---|---|---|

| repetition number in this series | 0 | memorised on | scheduled repetition | ||||

| scheduled repetition interval | last repetition or drill |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

Flashcard 7070653746444

| status | not learned | measured difficulty | 37% [default] | last interval [days] | |||

|---|---|---|---|---|---|---|---|

| repetition number in this series | 0 | memorised on | scheduled repetition | ||||

| scheduled repetition interval | last repetition or drill |

Parent (intermediate) annotation

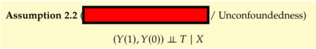

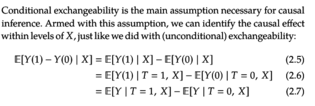

Open itThe ignorability assumption is used in Equation 2.3. We will talk more about Equation 2.4 when we get to Section 2.3.5. Another perspective on this assumption is that of exchangeability.

Original toplevel document (pdf)

cannot see any pdfs| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

Flashcard 7070670523660

| status | not learned | measured difficulty | 37% [default] | last interval [days] | |||

|---|---|---|---|---|---|---|---|

| repetition number in this series | 0 | memorised on | scheduled repetition | ||||

| scheduled repetition interval | last repetition or drill |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |

| status | not read | reprioritisations | ||

|---|---|---|---|---|

| last reprioritisation on | suggested re-reading day | |||

| started reading on | finished reading on |